Practical Introduction to Kafka and Message Queues

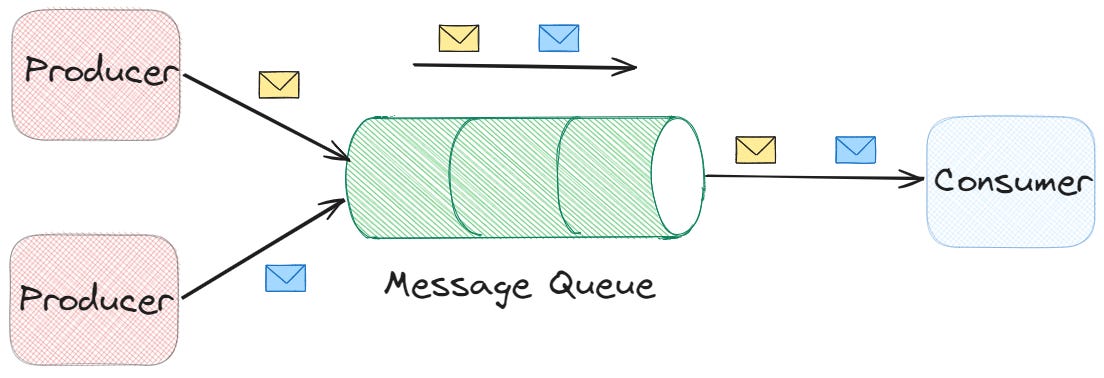

What is a Message Queue (MQ)?

A Message Queue (MQ) is a system that enables asynchronous communication between applications or services.

Instead of a producer and consumer being directly connected, messages are queued and processed in a decoupled manner.

Benefits:

- Decouples producers and consumers.

- Handles traffic spikes (buffer).

- Allows retries and durability.

- Facilitates distributed architectures and microservices.
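The decoupling and buffering described above can be sketched with nothing but the Python standard library. This is a toy, in-process illustration and not how a real broker works: the `run_demo` function, the `order-N` message names, and the buffer size of 2 are all assumptions made for the example.

```python
import queue
import threading

_STOP = object()  # sentinel telling the consumer there is nothing more to read


def run_demo(num_messages: int = 5) -> list:
    """Pass messages through a bounded buffer; return what the consumer handled."""
    buffer = queue.Queue(maxsize=2)  # small on purpose: put() waits when the buffer is full
    processed = []

    def producer() -> None:
        for i in range(num_messages):
            buffer.put(f"order-{i}")  # the producer never calls the consumer directly
        buffer.put(_STOP)

    def consumer() -> None:
        while True:
            message = buffer.get()  # blocks until a message is available
            if message is _STOP:
                break
            processed.append(message.upper())  # stand-in for real work

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return processed


if __name__ == "__main__":
    print(run_demo())
```

The producer and consumer only share the queue, so either side could be replaced or slowed down without the other needing to know.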

What is Event Streaming?

Event Streaming goes one step further. Instead of treating messages as one-time deliveries, it treats them as a continuous log of events.

Each event is stored with an offset in a partition, and consumers can re-read past events at any time.

Key characteristics of Event Streaming:

- Events are persisted for a configurable time (not deleted immediately after consumption).

- Consumers can process data in real-time or replay from history.

- Scales horizontally using topics and partitions.

- Ideal for real-time analytics, monitoring, IoT, and data pipelines.
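To make the "continuous log" idea concrete, here is a minimal sketch of an append-only log where reading does not delete anything. The `EventLog` class is hypothetical and ignores retention limits, partitions, and persistence to disk; it only shows offsets and replay.

```python
class EventLog:
    """Append-only list of events; an event's offset is its index in the list."""

    def __init__(self) -> None:
        self._events = []

    def append(self, event: str) -> int:
        """Store the event and return the offset it was assigned."""
        self._events.append(event)
        return len(self._events) - 1

    def read(self, from_offset: int = 0) -> list:
        """Return (offset, event) pairs starting at from_offset, without removing them."""
        if from_offset < 0:
            raise ValueError("from_offset must be non-negative")
        return list(enumerate(self._events[from_offset:], start=from_offset))


if __name__ == "__main__":
    log = EventLog()
    for event in ("page_view", "click", "purchase"):
        log.append(event)
    print(log.read())               # full history
    print(log.read())               # reading again returns the same history (replay)
    print(log.read(from_offset=2))  # or start from a later position
```

Contrast this with a classic queue, where a message is gone once it has been taken off the queue.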

What is Apache Kafka?

Kafka is a distributed event streaming platform designed for very high throughput (up to millions of messages per second on a suitably sized cluster) with low latency.

It is based on the model of topics → partitions → offsets:

- Topic → logical channel where messages are published.

- Partitions → split the topic for parallelism and scalability.

- Offsets → position of each message inside a partition (history).
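The topic → partition → offset model can be sketched as follows. This is a simplified illustration: the `Topic` class and the `sensor-N` keys are made up for the example, and CRC32 is used as a stand-in hash (Kafka's default partitioner hashes keys with murmur2). The point is only that the same key always lands in the same partition and that offsets are counted per partition.

```python
import zlib


class Topic:
    """A named set of partitions; each partition is its own ordered list."""

    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stand-in hash: CRC32 is deterministic, so a key always maps to one partition.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def publish(self, key: str, value: str) -> tuple:
        """Append to the key's partition and return (partition, offset)."""
        partition = self.partition_for(key)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1


if __name__ == "__main__":
    topic = Topic("sensor-readings", num_partitions=3)
    for key, value in [("sensor-1", "21.5"), ("sensor-2", "19.0"), ("sensor-1", "21.7")]:
        print(key, "->", topic.publish(key, value))
```

Because ordering is only guaranteed inside a partition, choosing the key decides which events stay in order relative to each other.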

Kafka vs RabbitMQ

| Feature | Kafka (Event Streaming) | RabbitMQ (Message Queue) |
|---|---|---|
| Model | Distributed event log (persistent) | Queue broker (messages removed after delivery) |
| Durability | Keeps event history for a configurable time | Deletes messages once consumed and acknowledged |
| Throughput | Very high (millions/s) | Medium (less scalable) |
| Latency | Low (real-time streaming) | Low to moderate |
| Use cases | Streaming, IoT, analytics, logs | Workflows, jobs, transactions |

👉 Rule of thumb:

- Kafka → when you need to process massive and durable data streams.

- RabbitMQ → when you need flexible routing and simplicity.

Smart Broker, Dumb Consumer (RabbitMQ) vs. Dumb Broker, Smart Consumer (Kafka)

One key way to understand RabbitMQ vs Kafka is by looking at where the “intelligence” lives in the system.

RabbitMQ: Smart Broker, Dumb Consumer

- Smart Broker: RabbitMQ has a rich exchange + routing system. Messages are published to an exchange, which applies rules (bindings, routing keys, fanout, topic filters) to decide which queues will receive the message.

- Dumb Consumer: Consumers simply read messages from a queue and acknowledge them. Once acknowledged, the broker deletes them.
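A rough sketch of the "smart broker" idea: the exchange decides which queues receive a message, and consuming removes it. The `Exchange` class and `consume` helper are hypothetical and model only direct and fanout routing; real RabbitMQ exchanges also support topic patterns, headers, acknowledgements, and much more.

```python
from collections import deque


class Exchange:
    """Routes each published message to bound queues according to its kind."""

    def __init__(self, kind: str) -> None:
        if kind not in ("direct", "fanout"):
            raise ValueError("kind must be 'direct' or 'fanout'")
        self.kind = kind
        self.bindings = []  # list of (routing_key, queue) pairs

    def bind(self, target_queue: deque, routing_key: str = "") -> None:
        self.bindings.append((routing_key, target_queue))

    def publish(self, message: str, routing_key: str = "") -> int:
        """Deliver the message and return how many queues received it."""
        delivered = 0
        for key, target_queue in self.bindings:
            # fanout ignores the key; direct requires an exact match
            if self.kind == "fanout" or key == routing_key:
                target_queue.append(message)
                delivered += 1
        return delivered


def consume(source_queue: deque):
    """Take the next message off the queue; once taken, it is gone."""
    return source_queue.popleft() if source_queue else None


if __name__ == "__main__":
    billing, shipping = deque(), deque()
    orders = Exchange("direct")
    orders.bind(billing, "order.paid")
    orders.bind(shipping, "order.shipped")
    orders.publish("invoice for order 1", routing_key="order.paid")
    print(list(billing), list(shipping))
    print(consume(billing), consume(billing))
```

Note that the consumer here has no say in what it receives: all routing decisions happen on the broker side.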

Kafka: Dumb Broker, Smart Consumer

- Dumb Broker: Kafka brokers don’t do routing logic. They just append messages to a log (topic/partition). They don’t decide who should get the message.

- Smart Consumer: Consumers are responsible for keeping track of offsets (where they are in the log). They decide what to read and can re-read old messages at any time.

- Implication: This model is very powerful for event streaming, replaying history, and building stateful applications. But it means consumers must be “smarter” and manage their own state (offsets, commits, retries).
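The consumer-side bookkeeping can be sketched like this. The `OffsetTrackingConsumer` class is an illustrative assumption, not a Kafka client API: it keeps a read position and a separately committed offset, which is enough to show why uncommitted work is re-read after a restart and how a consumer can rewind to replay history.

```python
class OffsetTrackingConsumer:
    """Reads from a shared log (a plain list here) and tracks its own progress."""

    def __init__(self, log: list, committed: int = 0) -> None:
        self.log = log
        self.committed = committed  # last position recorded as safely processed
        self.position = committed   # next offset this consumer will read

    def poll(self, max_events: int = 2) -> list:
        """Return the next batch and advance the in-memory position."""
        batch = self.log[self.position:self.position + max_events]
        self.position += len(batch)
        return batch

    def commit(self) -> None:
        """Record the current position as processed."""
        self.committed = self.position

    def seek(self, offset: int) -> None:
        """Jump to any offset, for example 0 to replay everything."""
        self.position = offset


if __name__ == "__main__":
    log = ["e0", "e1", "e2", "e3"]
    consumer = OffsetTrackingConsumer(log)
    print(consumer.poll())  # first batch
    consumer.commit()
    print(consumer.poll())  # second batch, but suppose we crash before committing

    # A restarted consumer resumes from the last committed offset,
    # so the second batch is delivered again.
    restarted = OffsetTrackingConsumer(log, committed=consumer.committed)
    print(restarted.poll())
```

Committing after processing, as in this sketch, means a crash can cause a batch to be processed twice, so the processing step should tolerate duplicates.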

Key Concepts: Throughput and Latency

- Throughput = number of messages processed per second.

  Example: can I process 1M IoT events per minute?

- Latency = time it takes for a message to go from producer to consumer.

  Example: do I want a click to appear in the dashboard in <100ms?

Trade-offs:

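- Increase throughput → process more messages in parallel (more partitions, larger batches). Larger batches can add latency, because messages wait for the batch to fill.

- Increase durability (acks=all) → higher latency, because the producer waits for all in-sync replicas to acknowledge each write.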
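A quick back-of-the-envelope calculation helps turn a throughput question into a parallelism number. The sketch below converts the "1M events per minute" example into messages per second and estimates how many parallel consumers would be needed. The per-consumer rate of 5,000 msg/s is a purely hypothetical figure; measure your own workload before sizing anything.

```python
import math


def messages_per_second(count: float, window_seconds: float) -> float:
    """Convert 'count messages per window' into a per-second rate."""
    return count / window_seconds


def consumers_needed(target_rate: float, per_consumer_rate: float) -> int:
    """Smallest number of parallel consumers that can keep up with target_rate."""
    if per_consumer_rate <= 0:
        raise ValueError("per_consumer_rate must be positive")
    return math.ceil(target_rate / per_consumer_rate)


if __name__ == "__main__":
    rate = messages_per_second(1_000_000, 60)  # 1M events per minute
    print(round(rate))                         # 16667 messages per second
    print(consumers_needed(rate, 5_000))       # 4, with the assumed 5,000 msg/s each
```

Within a Kafka consumer group, each partition is read by at most one consumer, so the topic needs at least as many partitions as the number of consumers estimated here.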

How to Decide with SLOs and Metrics

When designing a system with MQ/event streaming, ask yourself:

- What SLO do I have?

  - P99 latency < 200ms (99% of messages are delivered within 200ms).

  - 1M msg/s (throughput target).

- What’s more important?

  - If you prioritize durability: use more replicas and acks=all.

  - If you prioritize latency: use a lower acks setting (acks=1 or acks=0) and a smaller batch size.

- What happens if the system fails?

  - Can I lose messages?

  - Do I need retries / persistence?
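Checking a latency SLO such as "P99 < 200ms" means looking at the 99th percentile of measured latencies, not the average. The sketch below uses the nearest-rank method on a list of samples; the `percentile` and `meets_slo` helpers and the sample values are invented for illustration, and monitoring systems may compute percentiles slightly differently.

```python
def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range 1..100")
    ordered = sorted(samples)
    rank = (len(ordered) * pct + 99) // 100  # integer form of ceil(n * pct / 100)
    return ordered[rank - 1]


def meets_slo(samples: list, pct: int, threshold_ms: float) -> bool:
    """True when the chosen percentile is strictly below the threshold."""
    return percentile(samples, pct) < threshold_ms


if __name__ == "__main__":
    # Hypothetical measurements: 98 fast deliveries and 2 slow ones.
    latencies_ms = [20] * 98 + [500] * 2
    print(sum(latencies_ms) / len(latencies_ms))  # the mean looks healthy
    print(percentile(latencies_ms, 99))           # the P99 exposes the slow tail
    print(meets_slo(latencies_ms, 99, 200))
```

In this made-up data set the mean is under 30ms while the P99 is 500ms, which is why SLOs are usually stated as percentiles.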

Hands-on Exercise: Kafka with Docker and CLI

1. Start Kafka with Docker

docker run -d --name kafka -p 9092:9092 \
  -e KAFKA_CFG_NODE_ID=1 \
  -e KAFKA_CFG_PROCESS_ROLES=broker,controller \
  -e KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=1@localhost:9093 \
  -e KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093 \
  -e KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://localhost:9092 \
  -e KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER \
  bitnami/kafka:3.7

This will:

- Expose Kafka on port 9092.

- Configure it to act as both broker and controller (since it’s a single-node setup).

- Use PLAINTEXT communication (no SSL).

- Advertise the broker at localhost:9092 for clients.

- Start a single instance (not clustered).

2. Create a Topic

docker exec -it kafka kafka-topics.sh \
  --create \
  --topic test-topic \
  --bootstrap-server localhost:9092 \
  --partitions 1 \
  --replication-factor 1

- --topic test-topic: Creates a topic named test-topic.

- --partitions 1: Only one partition.

- --replication-factor 1: No replication (single node).

3. List Topics

docker exec -it kafka kafka-topics.sh \

--list \

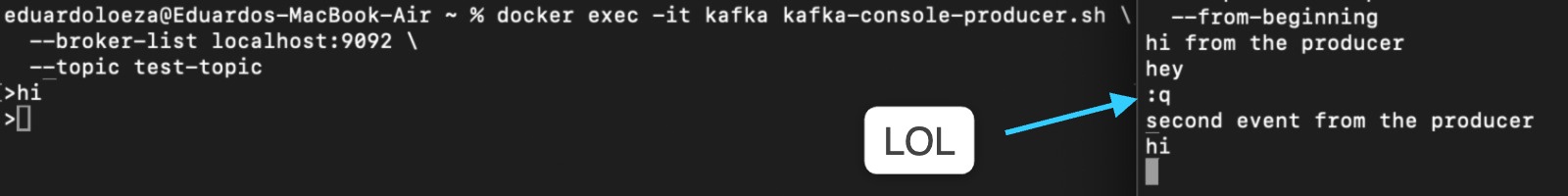

--bootstrap-server localhost:90924. Start a Producer

docker exec -it kafka kafka-console-producer.sh \
  --bootstrap-server localhost:9092 \
  --topic test-topic

Now you can type messages into the console. Each line will be sent as a Kafka message.

5. Start a Consumer

In another terminal:

docker exec -it kafka kafka-console-consumer.sh \
  --bootstrap-server localhost:9092 \
  --topic test-topic \
  --from-beginning

- --from-beginning: Reads all messages from the start of the topic (the earliest offset still retained).

6. Inspect Consumer Groups

docker exec -it kafka kafka-consumer-groups.sh \
  --bootstrap-server localhost:9092 \
  --list

Producing and Consuming Messages with Kafka CLI

1. Produce messages (Producer)

Run this command in your terminal:

docker exec -it kafka kafka-console-producer.sh \
  --bootstrap-server localhost:9092 \
  --topic test-topic

Now you can type messages (e.g., Hello Kafka!). Each time you press Enter, the message will be sent to test-topic.

2. Consume messages (Consumer)

Open another terminal and run:

docker exec -it kafka kafka-console-consumer.sh \
  --bootstrap-server localhost:9092 \
  --topic test-topic \
  --from-beginning

Example: Console in Action

Here is an example of how the Kafka console looks while producing and consuming messages.

In this case, it shows my own messages during the exercise:

End-to-End Flow

- Start the consumer so it can listen for messages.

- Launch the producer and type some messages.

- Watch the consumer terminal receive them in real-time.